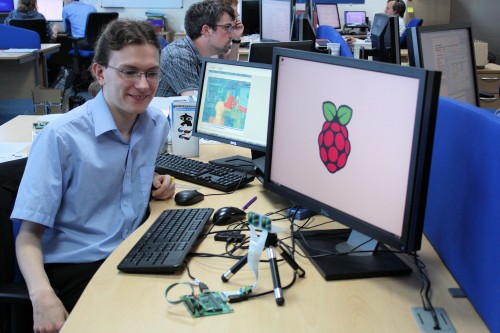

| Liz: We’ve got a number of good friends at Argon Design, a tech consultancy in Cambridge. (James Adams, our Director of Hardware, used to work there; as did my friend from the time of Noah, @eyebrowsofpower; the disgustingly clever Peter de Rivaz, who wrote Penguins Puzzle, is an Argon employee; and Steve Barlow, who heads Argon up, used to run AlphaMosaic, which became Broadcom’s Cambridge arm, and employed several of the people who work at Pi Towers back in the day.) We gave the Argon team a Compute Module to play with this summer, and they set David Barker, one of their interns, to work with it. Here’s what he came up with: thanks David, and thanks Argon!

This summer I spent 11 weeks interning at a local tech company called Argon Design, working with the new Raspberry Pi Compute Module. “Local” in this case means Cambridge, UK, where I am currently studying for a mathematics degree. I found the experience extremely valuable and a lot of fun, and I have learnt a great deal about the hardware side of the Raspberry Pi. And here I would like to share a bit of what I did.

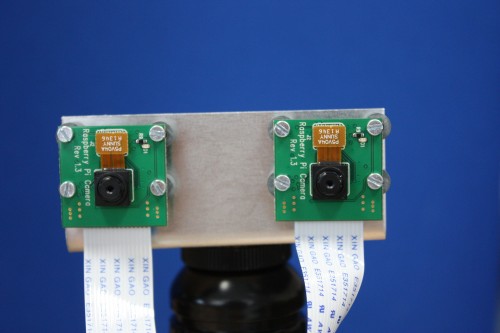

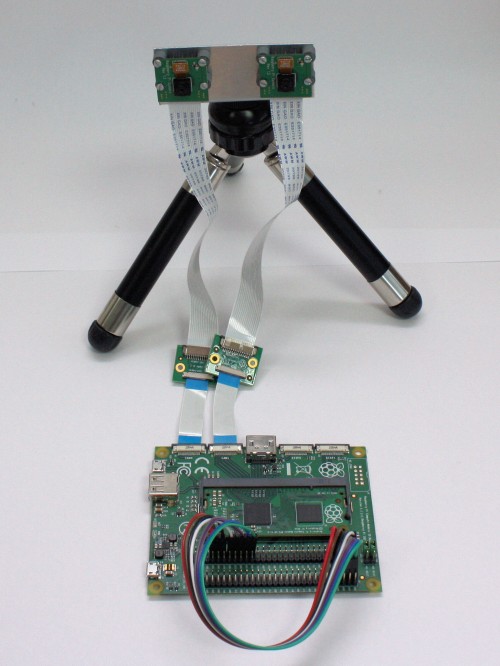

My assignment was to develop an example of real-time video processing on the Raspberry Pi. Argon know a lot about the Pi and its capabilities and are experts in real-time video processing, and we wanted to create something which would demonstrate both. The problem we settled on was depth perception using the two cameras on the Compute Module. The CTO, Steve Barlow, who has a good knowledge of stereo depth algorithms, gave me a Python implementation of a suitable one.

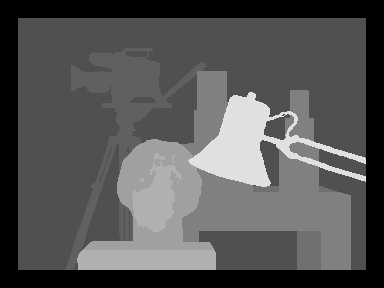

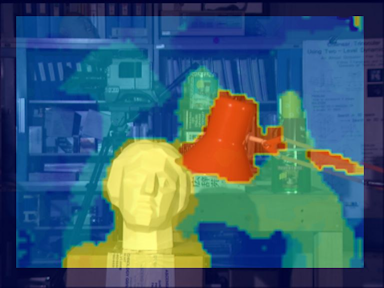

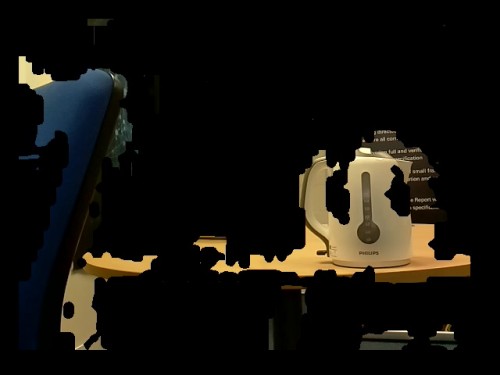

The algorithm we used is a variant of one which is widely used in video compression. The basic idea is to divide each frame into small blocks and to find the best match with blocks from other frames – this tells us how far the block has moved between the two images. The video version is designed to detect motion, so it tries to match against the previous few frames. Meanwhile, the depth perception version tries to match the left and right camera images against each other, allowing it to measure the parallax between the two images. The other main difference from video compression is that we used a different measure of correlation between blocks. The one we used is designed to work well in the presence of sharp edges and when the exposure differs between the cameras. This means that it is considerably more accurate, at the cost of being more expensive to calculate.

When I arrived, my first task was to translate this algorithm from Python to C, to see what sort of speeds we could reasonably expect. While doing this, I made several algorithmic improvements. This turned out to be extremely successful – the final C version was over 1000 times as fast as the original Python version, on the same hardware! However, even with this much improvement, it was still taking around a second to process a moderate-sized image on the Pi’s ARM core. Clearly another approach was needed.

There are two other processors on the Pi: a dual-core video processing unit called the VPU and a 12-core GPU, both of which are part of the VideoCore block. They both run at a relatively slow 250 MHz, but are designed in such a way that they are actually much faster than the ARM core for video and imaging tasks. The team at Argon has done a lot of VideoCore programming and is familiar with how to get the best out of these processors. So I set about rewriting the program, from C into VPU assembler. This sped up the processing on the Pi to around 90 milliseconds.
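
For readers who want to see the block-matching idea described above in concrete form, here is a minimal Python sketch. It is not Argon's implementation. It scores candidate blocks with the sum of absolute differences (SAD), the cheap measure typical of video compression, rather than the more robust correlation measure mentioned above, which this post does not name. The function names, the block size and the search range are all illustrative assumptions, and the test images are synthetic.

```python
def sad(left, right, y, x_left, x_right, block):
    """Sum of absolute differences between two block-sized patches."""
    total = 0
    for dy in range(block):
        for dx in range(block):
            total += abs(left[y + dy][x_left + dx] - right[y + dy][x_right + dx])
    return total


def block_disparity(left, right, block=4, max_disp=8):
    """Return one disparity (horizontal shift in pixels) per block of the left image."""
    height, width = len(left), len(left[0])
    result = []
    for y in range(0, height - block + 1, block):
        row = []
        for x in range(0, width - block + 1, block):
            best_d, best_cost = 0, None
            # Assumption: a point at column x in the left image appears at
            # column x - d in the right image, so only shifts to the left
            # are searched, and never past the image edge.
            for d in range(0, min(max_disp, x) + 1):
                cost = sad(left, right, y, x, x - d, block)
                if best_cost is None or cost < best_cost:
                    best_d, best_cost = d, cost
            row.append(best_d)
        result.append(row)
    return result


def make_shifted_pair(width, height, shift):
    """Build a synthetic stereo pair: the left image is the right image moved `shift` pixels."""
    right = [[x * x + y for x in range(width)] for y in range(height)]
    left = [
        [right[y][x - shift] if x >= shift else 0 for x in range(width)]
        for y in range(height)
    ]
    return left, right


if __name__ == "__main__":
    left, right = make_shifted_pair(16, 8, 3)
    print(block_disparity(left, right))  # [[0, 3, 3, 3], [0, 3, 3, 3]]
```

The first block in each row reports 0 because it sits at the image edge and has no room to search. Every other block recovers the 3-pixel shift. Larger shifts correspond to objects closer to the cameras, which is what turns this map of shifts into a depth map.
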
Dropping the size of the image slightly, we eventually managed to get the whole process – get image from cameras, process on VPU, display on screen – to run at 12fps. Not bad for 11 weeks’ work!

I also coded up a demonstration app, which can do green-screen-free background removal, as well as producing false-colour depth maps. There are screenshots below; the results are not exactly perfect, but we are aware of several ways in which this could be improved. This was simply a matter of not having enough time – implementing the algorithm to the standard of a commercial product, rather than a proof-of-concept, would have taken quite a bit longer than the time I had for my internship.

To demonstrate our results, we ran the algorithm on a standard image pair produced by the University of Tsukuba. Below are the test images, the exact depth map, and our calculated one.

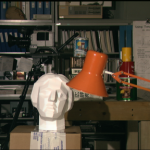

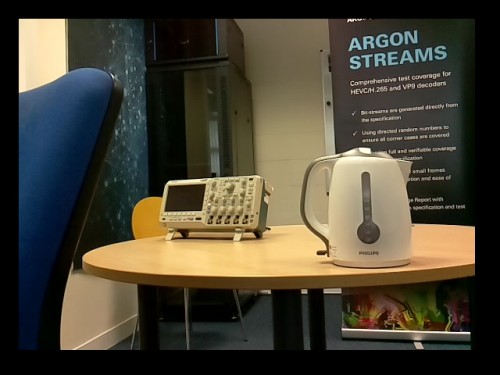
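
The two features of the demonstration app, background removal and false-colour depth maps, can both be derived from a disparity map with simple per-pixel rules. The sketch below shows one plausible way to do that and is not the code from the app itself. It assumes a disparity value is already available for every pixel, that larger disparity means a closer object, and that `max_disp` is greater than zero. The function names, the threshold and the blue-to-red colour ramp are hypothetical choices made for illustration.

```python
def remove_background(image, disparity, threshold, fill=(0, 0, 0)):
    """Keep pixels whose disparity is at least `threshold`; replace the rest with `fill`."""
    return [
        [pixel if d >= threshold else fill for pixel, d in zip(img_row, disp_row)]
        for img_row, disp_row in zip(image, disparity)
    ]


def false_colour(disparity, max_disp):
    """Map each disparity to an RGB triple: blue for far, red for near."""
    out = []
    for row in disparity:
        out_row = []
        for d in row:
            # Normalise to the range 0..1, clamping out-of-range values.
            t = min(max(d / max_disp, 0.0), 1.0)
            out_row.append((round(255 * t), 0, round(255 * (1 - t))))
        out.append(out_row)
    return out


if __name__ == "__main__":
    image = [[(10, 20, 30), (40, 50, 60)], [(70, 80, 90), (100, 110, 120)]]
    disparity = [[0, 5], [8, 2]]
    print(remove_background(image, disparity, threshold=4))
    print(false_colour(disparity, max_disp=8))
```

A fixed threshold is the simplest possible rule. It treats everything nearer than a chosen distance as foreground, so errors in the disparity map show up directly as holes or stray patches in the result.
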

We also set up a simple scene in our office to test the results on some slightly more “real-world” data.

However, programming wasn’t the only task I had. I also got to design and build a camera mount, which was quite a culture shock compared to the software work I’m used to.

Liz: I know that stereo vision is something a lot of Compute Module customers have been interested in exploring. David has made a more technical write-up of this case study available on Argon’s website for those of you who want to look at this problem in more…depth. (Sorry.)

|

A Semi-automated Technology Roundup Provided by Linebaugh Public Library IT Staff | techblog.linebaugh.org

Tuesday, October 28, 2014

Real-time depth perception with the Compute Module
