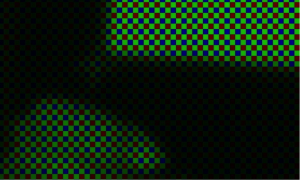

| Liz: We’re very close to being able to release the $25 add-on camera board for the Raspberry Pi now. David Plowman has been doing a lot of the work on imaging and tuning. He’s very kindly agreed to write a couple of guest posts for us explaining some more for the uninitiated about the process of engineering the camera module. Here’s the first – I hope you’ll find it as fascinating as I did. Thanks David!

Lights! Camera! … Action?

So you've probably all been wondering how it can take quite so long to get a little camera board working with the Raspberry Pi. Shouldn't it be like plugging in a USB webcam, all plug'n'play? Alas, it's not as straightforward as you might think. Bear with me for this – and a subsequent – blog posting and I'll try and explain all.

The Nature of the Beast

The camera we're attaching to the Raspberry Pi is a 5MP (2592×1944 pixels) Omnivision 5647 sensor in a fixed focus module. This is very typical of the kinds of units you'd see in some mid-range camera phones (you might argue the lack of autofocus is a touch low-end, but it does mean less work for us and you get your camera boards sooner!).

Besides power, clock signals and so forth, we have two principal connections (or data buses in electronics parlance) between our processor (the BCM2835 on the Pi) and the camera. The first is the I2C ("eye-squared-cee") bus, which is a relatively low bandwidth link that carries commands and configuration information from the processor to the image sensor. This is used to do things like start and stop the sensor, change the resolution it will output, and, crucially, to adjust the exposure time and gain applied to the image that the sensor is producing. The second connection is the CSI bus, a much higher bandwidth link which carries pixel data from the camera back to the processor. Both of these buses travel along the ribbon cable that attaches the camera board to your Pi.
The astute amongst you will notice that there aren't all that many lines in the ribbon cable – and indeed both I2C and CSI are serial protocols for just this reason.

The pixels produced are 10 bits wide rather than the 8 bits you're more used to seeing in your JPEGs. That's because we're ultimately going to adjust some parts of the dynamic range and we don't want "gaps" (which would become visible as "banding") to open up where the pixel values are stretched out. At 15fps (frames per second) that's a maximum of 2592x1944x10x15 bits per second (approximately 750Mbps). Actually many higher-end cameras will give you frames larger than this at up to 30fps, but still, this is no slouch!

Show me some pictures!

So, armed with our camera modules and adapter board, the next job we have is to write a device driver to translate our camera stack's view of the camera ("use this resolution", "start the camera" and so forth) into I2C commands that are meaningful to the image sensor itself. The driver has to play nicely with the camera stack's AEC/AGC (auto-exposure/auto-gain) algorithm whose job it is to drive the exposure of the image to the "Goldilocks" level – not too dark, not too bright. Perhaps some of you remember seeing one of Dom's early camera videos where there were clear "winks" and "wobbles" in brightness. These were caused by the driver not synchronising the requested exposure changes correctly with the firmware algorithms… you'll be glad to hear this is pretty much the first thing we fixed!

With a working driver, we can now capture pixels from the camera. These pixels, however, do not constitute a beautiful picture postcard image. We get a raw pixel stream, even more raw, in fact, than in a DSLR's so-called raw image where certain processing has often already been applied. Here's a tiny crop from a raw image, greatly magnified to show the individual pixels.

Surprised?
To make sense of this vast amount of strange pixel data the Broadcom GPU contains a special purpose Image Signal Processor (ISP), a very deep hardware pipeline tasked with the job of turning these raw numbers into something that actually looks nice. To accomplish this, the ISP will crunch tens of billions of calculations every second.
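
If you'd like to check the bandwidth figure and the banding argument from earlier for yourself, here is a minimal Python sketch. The function names and the gain of 4 are made up purely for illustration, and the "stretch" is a crude stand-in for the kind of adjustment a real pipeline might make, not what the ISP actually does. It works out the raw pixel payload (ignoring any CSI protocol overhead) and counts how many distinct 8-bit output levels survive when the darkest quarter of the range is stretched to full scale.

```python
# Sensor figures quoted in the post.
WIDTH, HEIGHT = 2592, 1944
BITS_PER_PIXEL = 10
FPS = 15


def raw_data_rate_bps(width, height, bits_per_pixel, fps):
    """Raw pixel payload in bits per second (no protocol overhead)."""
    return width * height * bits_per_pixel * fps


def distinct_levels_after_stretch(bit_depth, gain, out_bits=8):
    """Count distinct output codes after applying an integer gain.

    Hypothetical illustration: every input code is multiplied by `gain`,
    clipped at full scale, then rescaled to an `out_bits` output.
    """
    in_max = (1 << bit_depth) - 1
    out_max = (1 << out_bits) - 1
    levels = set()
    for code in range(in_max + 1):
        stretched = min(code * gain, in_max)  # apply gain, clip at full scale
        # Integer round-to-nearest rescale to the output range.
        levels.add((2 * stretched * out_max + in_max) // (2 * in_max))
    return len(levels)


rate = raw_data_rate_bps(WIDTH, HEIGHT, BITS_PER_PIXEL, FPS)
print(f"{rate / 1e6:.1f} Mbit/s")  # 755.8 Mbit/s

print(distinct_levels_after_stretch(10, 4))  # 256: no gaps in an 8-bit output
print(distinct_levels_after_stretch(8, 4))   # 65: gaps, i.e. visible banding
```

Starting from 10 bits, the stretched result still fills every 8-bit output level; starting from 8 bits, three out of every four levels go missing, which is exactly the banding we want to avoid.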

What do you mean, two-thirds of my pixels are made up?

Yes, it is imaging's inconvenient truth that fully two-thirds of the colour values in an RGB image have been, well, we engineers prefer to use the word interpolated. An image sensor is a two dimensional array of photosites, and each photosite can sample only one number – either a red, a green or a blue value, but not all three. It was the idea of Bryce Bayer, working for Kodak back in 1976, to add an array of colour filters over the top so that each photosite measures a different colour channel. The arrangement of reds, greens and blues that you see in the crop above is now referred to as a "Bayer pattern" and a special algorithm, often called a "demosaic algorithm", is used to create the fully-sampled RGB image. Notice how there are twice as many greens as reds or blues, because our eyes are far more sensitive to green light than to red or blue.

The Bayer pattern is not without its problems, of course. Most obviously, a large part of the incoming light is simply filtered out, meaning Bayer sensors perform poorly in dark conditions. Mathematicians may also mutter darkly about "aliasing", which makes a faithful reconstruction of the original colours very difficult when there is high detail, but nonetheless the absolutely overwhelming majority of sensors in use today are of the Bayer variety.

Now finally for today's posting, here's what the whole image looks like once it has been "demosaicked".

My eyes! They burn with the ugly!

It's recognisable, that's about the kindest thing you can say, but hardly lovely – we would still seem to have some way to go. In my next posting, then, I'll initiate you into the arcane world of camera tuning… |
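
For anyone who wants to play with the "two-thirds made up" idea from the Bayer section above, here is a small pure-Python sketch. It is a toy under stated assumptions, not the ISP's algorithm: the RGGB ordering is assumed for illustration (the real sensor's layout may differ), the function names are hypothetical, and the reconstruction is the simplest possible one, averaging same-colour neighbours in a 3x3 window. Images need to be at least 2x2 so that every window contains all three colours.

```python
def bayer_channel(row, col):
    """Colour index (0=R, 1=G, 2=B) sampled at a photosite.

    Assumes an RGGB layout, chosen only for illustration.
    """
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1   # even rows: R G R G ...
    return 1 if col % 2 == 0 else 2       # odd rows:  G B G B ...


def mosaic(rgb):
    """Keep one colour value per photosite and discard the other two."""
    return [[px[bayer_channel(r, c)] for c, px in enumerate(line)]
            for r, line in enumerate(rgb)]


def demosaic(raw):
    """Interpolate missing channels from same-colour 3x3 neighbours."""
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        out_row = []
        for c in range(w):
            sums, counts = [0, 0, 0], [0, 0, 0]
            for rr in range(max(r - 1, 0), min(r + 2, h)):
                for cc in range(max(c - 1, 0), min(c + 2, w)):
                    ch = bayer_channel(rr, cc)
                    sums[ch] += raw[rr][cc]
                    counts[ch] += 1
            pixel = [sums[ch] / counts[ch] for ch in range(3)]
            # The channel this photosite actually measured is kept as-is.
            pixel[bayer_channel(r, c)] = raw[r][c]
            out_row.append(tuple(pixel))
        out.append(out_row)
    return out


# A flat-coloured 4x4 test image: every pixel is (R, G, B) = (200, 100, 50).
flat = [[(200, 100, 50)] * 4 for _ in range(4)]
raw = mosaic(flat)
print(raw[0])  # [200, 100, 200, 100]
restored = demosaic(raw)
print(restored[0][0])
```

A flat image comes back perfectly, but each output pixel holds one measured value and two interpolated ones. Try an image with a sharp edge or fine stripes and the interpolated values start to go wrong, which is the aliasing problem mentioned above.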
